Think about what strides we’ve made in artificial intelligence in the last 20 years. Driverless cars, ChatGPT, Siri, Deep Learning, and the list goes on. One little-known fact is that a group of black women at Spelman College were doing things with artificial intelligence 20 years ago that, even today, only a small fraction of people could do or even think of doing. How do you program four-legged robots and two-legged humanoid robots to play soccer autonomously, using computer vision, machine learning, and localization, with no remote control? How do you combine AI and robotics with the arts and healthcare to inspire the next generation of computer scientists and engineers around the country?

With the support of Spelman President Beverly Daniel Tatum; our science dean, Dr. Lily McNair; Spelman alumni and board members; and sponsors such as the National Science Foundation, NASA, Coca-Cola, Boeing, GM, GE, Apple, and Google, the Spelman SpelBots traveled the world to compete in autonomous quadruped and humanoid robot soccer in Japan, Germany, Italy, and the U.S. They faced some of the world’s leading universities in AI and robotics research of two decades ago.

It was around March of 2004 when I went to Spelman College to interview for a position as an assistant professor in computer and information sciences. I was already an assistant professor of electrical and computer engineering at the University of Iowa, but I had read the book The Purpose Driven Life with my wife and decided that part of my God-given purpose was to help African American students succeed academically, vocationally, and spiritually. As I shared in my book, Out of the Box: Building Robots, Transforming Lives, I interviewed at Spelman and Howard University and had an informal interview at Morehouse.

When Spelman made me an offer, I wasn’t sure what I should do. But my wife said to me, “Andrew, don’t you want our daughters to have professors who really want to see them succeed?” I decided to go, even though many people didn’t understand why I would leave a Big Ten research university for a small, historically black undergraduate liberal arts college for women. I resolved to go there, see unlimited possibilities in my students, and treat them and believe in them the way I would want a professor to treat and believe in my own daughters.

There are many stories I can tell about our experiences. My book just touches on the first team. When it was released, I was working at Apple while on sabbatical from Spelman, at the behest of Apple co-founder Steve Jobs. He wanted me to help the company hire more black engineers, and I was able to do that. More importantly, these young women were pioneering role models in AI and robotics, along with my colleague Dr. Ayanna Howard, who mentored Aryen, one of our first SpelBots students, at NASA’s Jet Propulsion Laboratory before the team was formed. Aryen contacted me while I was moving to Spelman, and I asked her if she wanted to start a RoboCup robotics team. She volunteered to be our first co-captain, along with a student named Brandy.

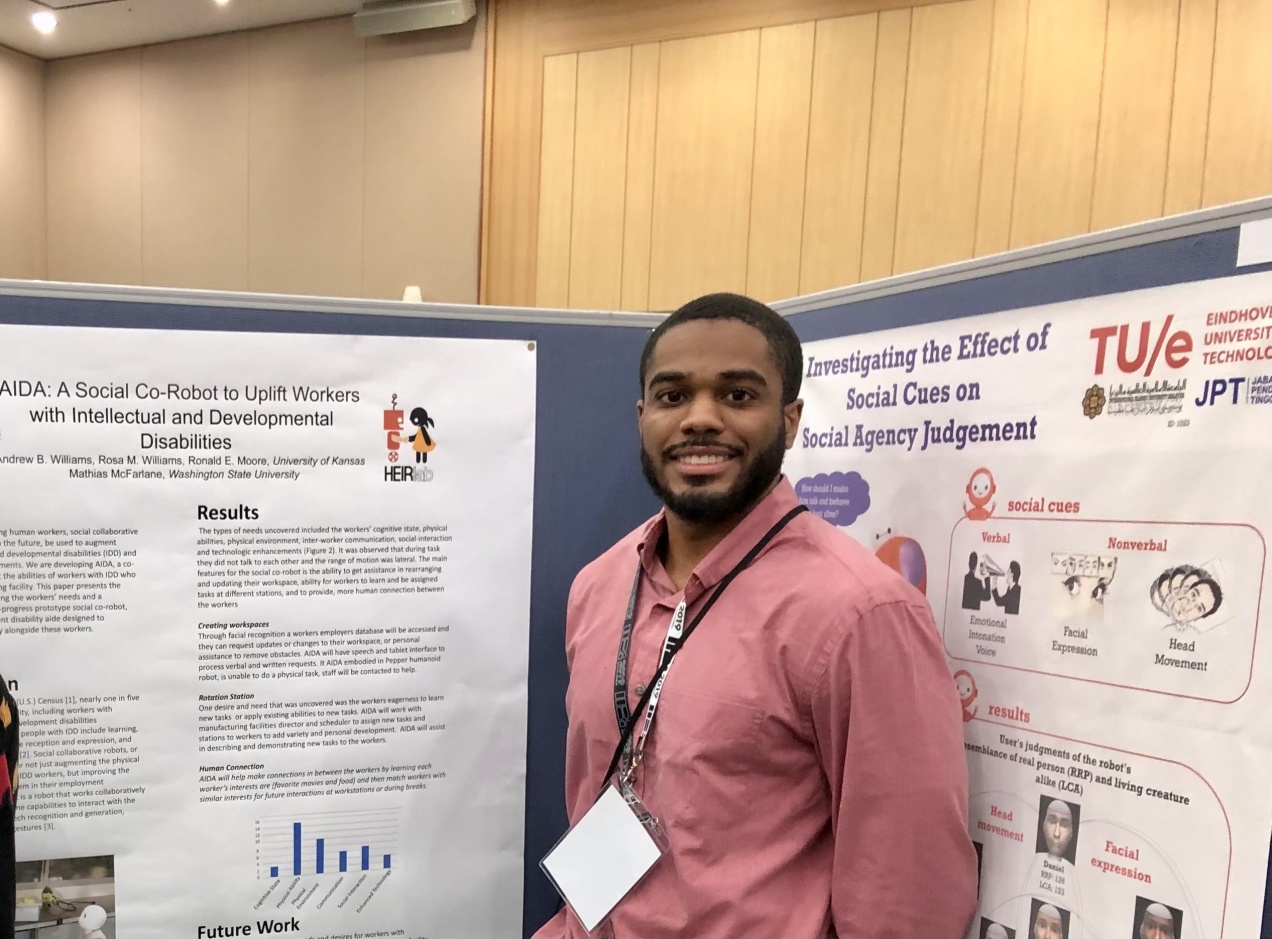

The AI and robotics problems that the Spelman undergraduates had to grapple with to compete against graduate students at Georgia Tech, Carnegie Mellon, the University of Texas at Austin, and international teams were daunting: localization, computer vision, motion, locomotion, teamwork, and decision making. I made a point of never putting limits on them and of believing in them. Several teams of SpelBots did amazing things. One example is the team led by co-captains Jonecia and Jazmine, which tied Japan’s Fukuoka Institute of Technology in a championship match at the RoboCup Japan Open autonomous humanoid robotics competition. (There are so many more SpelBots students I could name here.)

So here’s to remembering the women of the Spelman College SpelBots RoboCup robotics team who competed in the U.S., Europe, and Asia. Watch this video made by the National Science Foundation, and remember to help all of our young women dream big and become pioneers in Science, Technology, Engineering, and Math (STEM).

Picture: Spelman College SpelBots students Jonecia, Jazmine, Ariel, and Naquasha with Dr. Andrew B. Williams at the RoboCup 2009 Japan Open in Osaka, Japan. Photo credit © Adrianna Williams, used with permission.

© 2024 Andrew B. Williams

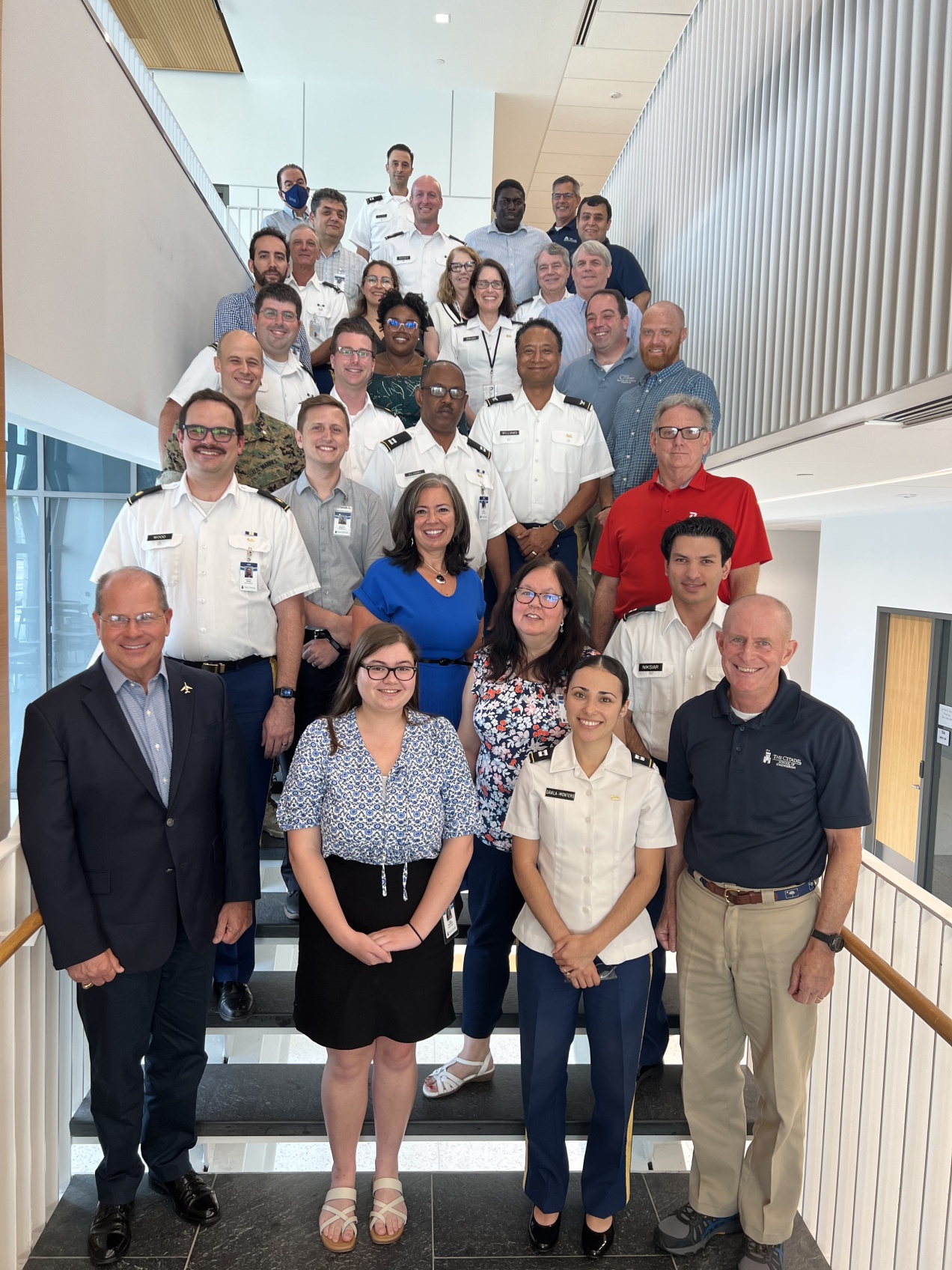

About the Author: Andrew B. Williams is Dean of Engineering and Louis S. LeTellier Chair for The Citadel School of Engineering. He was recently named one of Business Insider’s Cloudverse 100 and humbly holds the designation of AWS Education Champion. He sits on the AWS Machine Learning Advisory Board and is a certified AWS Cloud Practitioner. He is proud to have recently received a Generative AI with Large Language Models certification from DeepLearning.AI and AWS. Andrew has also held positions at Spelman College, the University of Kansas, the University of Iowa, Marquette University, Apple, GE, and Allied Signal Aerospace Company. He is the author of the book Out of the Box: Building Robots, Transforming Lives.